How do you know what works and what doesn’t? Growth isn’t achieved by hacks

and tricks, but processes and optimisation. Brian Balfour talks through

HubSpot’s project cycle and how they turn experiments into success.

Transcription

The first part of my deck repeats a lot of what James said, which was not on purpose, but it’s really good because what I dive into in the second half builds on a lot of what he was saying and goes into that next level of detail.

And so a lot of what I’m talking about is not just about viral experiments,

but we use this foundation for everything that we do on the growth team at

HubSpot.

Not just virality experiments on some of our new products, but content experiments, Facebook Ads experiments, things that we work on with retention, everything that we do.

Similar to James, the number one question I am always asked about growth is:

“What tactic would you use to grow my company?”

And when I get asked that question, my initial response is “I’ve got no clue. I just met you. I know nothing about your company, I know nothing about your audience, I know nothing about your product.”

And typically the reason I get this question is that they’re looking for one tip, one secret, one hack, one tactic to unlock this explosion of growth in their business. And, unfortunately, my answer to “what tactic would you use to grow the company?” is not what most people think it is.

Growing your company through process

It has nothing to do with tactics, and it has everything to do with process.

And when I mention the word process to most entrepreneurs, their eyes roll back in their heads, or they glaze over, and they’re like “Man, I don’t want to even listen to you.”

But what I want to dive into first is four reasons to focus on process before tactics. And the first reason echoes a lot of what James said:

“What works for others is not going to work for you.”

Your audience is different; your product is different, your business model is

different, your customer journey is different.


Bottom line, your business is different plain and simple

You need a method — you need a way, you need a process to find that unique combination of things that are going to work for you, not somebody else.

And so as you listen to a lot of the tactics today, and a lot of the great speakers, something I encourage you to do is take them as inspiration or as input to this process, and not necessarily as prescription.

Growth

In a lot of cases, it ends up being the sum of a lot of small parts. I’m sure a lot of us, every week, are probably reading TechCrunch, VentureBeat, one of these tech publications, and we see some company with some growth curve like this. And all of us are sitting there and wondering “What happened there? What happened right before this exponential curve just took off?”

But the real questions we should be asking and digging into are “What are all the little things that they did to get to that point, that aggregated to get them to that explosion of growth? And what are all the little things that they did afterwards to continue to accelerate and keep that growth curve going?”

Because at the end of the day, as James said,

“Silver bullets don’t exist.”

You will certainly have experiments and tactics that you do that will end up

being outliers, but chasing silver bullets is kind of like chasing unicorns —

it’s a waste of your time.

You want to be aggressive, but remember at the end of the day, it’s not going

to be one thing that’s going to change the growth curve of your business.


Rate of change is accelerating

If you look at the past year/18 months, even a shorter timeframe, we can pick any acquisition channel, and we can probably name multiple reasons about how the foundation of those channels is changing.

SEO — they’re taking away keyword data, they’re introducing AuthorRank

into their algorithm, content freshness, social sharing, Google’s massively

changing the search result format.

Display continues to be disrupted by RTB, browsers are taking away cookies, and of course, there’s this whole mobile thing that’s completely changing the game.

Email — Google is experimenting with emphasizing unsubscribe buttons,

tabbed inboxes, inbox actions, of course, mobile changes the format.

We can go through any one of these channels, and we can probably name foundational changes in the past six months.

More than that, and of course I’ve got a slide from a presentation I saw James give a while back, the acquisition channels themselves, not just in virality but in paid acquisition, come and go.

More and more are becoming available to us over time. The point is that things are constantly changing, and so you need to be constantly changing, and you need a way to constantly be finding the new things that are going to continue to accelerate the growth of your business.


Build a machine

The machine, to me, is something that’s scalable, somewhat predictable, and definitely repeatable.

And so if you think about this in the framework of the machine, the machine produces the tactics; the tactics are the output. So what makes the machine? What are the inputs of the machine?

And that’s the process that you drive to basically find and discover and test

all of these tactics.

The Process

The goals of this process: there are really four primary goals that we try to achieve with this process at HubSpot.

Rhythm

James talked a lot about how you have to be able to sustain failure.

Well, momentum is a very powerful thing, and so you want to establish a

never-ending regular cadence of experimentation to basically fight through the

failures, to find the successes, and really find that momentum that keeps

carrying you forward.

Learning

The second, and probably the most important, is learning: continuous learning. That constant learning about your customer, your product, and your channels, and feeding that learning back into the process to continue to build off of that base of knowledge, is what’s going to continue to drive you forward, finding the successful experiments and the successful tactics.

Accountability and Autonomy

If you’re building out a growth team with multiple people, I believe these two things are really important. As you’ll see, individuals on the growth team get to decide what they work on to achieve the team goals that we set. As the team leader, I’m not providing a very specific set of directions.

But with autonomy comes accountability. People on the growth team don’t have to be right all the time, and their experiments don’t always have to be successful, but there’s an expectation that they improve over time in terms of their knowledge of the customer, product, and channels, and that we continue to find more and more successful experiments as we build off of this base of knowledge.

So, before we dive into what we do on a day-to-day and week-to-week basis, we first need to understand where we’re going. The framework we use to set goals on the growth team is something that probably a lot of you are familiar with. It’s called the OKR framework.

It was invented by Intel, popularized by Google, and used at Zynga and many of the other high-growth companies here. OKR stands for ‘Objectives and Key Results’. I’m not going to dive into details here, but how we set these goals is basically:

First, we take a step back and we ask the question “What is the one area that

we can work on, or the one thing that we can achieve that drives the biggest

impact on our growth curve right now?”

And taking this step back and really understanding this question before we

actually dive into the individual experiments is really important. So we

figure that out, we then set a time frame, it’s always 30 to 90 days. Anything

shorter than 30 days, we’re probably not being aggressive enough and big

enough with what we’re trying to achieve. Anything longer than 90 days, we’re

probably biting off too big of a chunk.

And so we always try to set this objective to something that we feel like we can reasonably make significant progress on in 30 to 90 days, and then we set three key results. These are quantitative measurements that give us evidence that we achieved this objective.

And the key results are set in order of difficulty. KR1 is basically like “Hey, we did a good job.” We try to set these goals so that we hit our KR1s about 90% of the time. KR2s are like “Okay, we went above and beyond and we did a really great job with this.” We hit those about 50% of the time. And our KR3s are like “Man, we knocked this thing out of the park, let’s go to Vegas and celebrate”, and we try to set these so that we only hit them about once a year.

If we’re hitting our KR3s more than once a year, then we know that we’re not setting goals aggressively enough, and if we’re never hitting our KR1s, then we’re probably setting them too aggressively. So it’s about finding that happy medium.
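One way to keep an eye on that calibration is to log which key results each cycle hit and check the hit rates now and then. The sketch below is only an illustration: the `CycleResult` record and both function names are hypothetical, and it encodes only the two rules of thumb mentioned here (KR3 more than once a year, KR1 never).

```python
from dataclasses import dataclass


@dataclass
class CycleResult:
    """Key results hit in one 30-90 day OKR cycle (hypothetical record)."""
    kr1: bool
    kr2: bool
    kr3: bool


def hit_rates(cycles):
    """Return the fraction of cycles in which each key result was hit."""
    if not cycles:
        raise ValueError("need at least one cycle")
    n = len(cycles)
    return {
        "kr1": sum(c.kr1 for c in cycles) / n,
        "kr2": sum(c.kr2 for c in cycles) / n,
        "kr3": sum(c.kr3 for c in cycles) / n,
    }


def calibration_notes(last_year_cycles):
    """Apply the two rules of thumb to one year of cycles."""
    notes = []
    if sum(c.kr3 for c in last_year_cycles) > 1:
        notes.append("KR3 hit more than once this year: goals may not be aggressive enough")
    if last_year_cycles and not any(c.kr1 for c in last_year_cycles):
        notes.append("KR1 never hit: goals may be too aggressive")
    return notes


# Made-up history of four cycles in one year.
year = [
    CycleResult(kr1=True, kr2=True, kr3=True),
    CycleResult(kr1=True, kr2=False, kr3=True),
    CycleResult(kr1=True, kr2=True, kr3=False),
    CycleResult(kr1=False, kr2=False, kr3=False),
]
print(hit_rates(year))
print(calibration_notes(year))
```

The rates can then be compared by eye against the rough targets of 90% for KR1 and 50% for KR2.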

So, after we take this step back and really think about what is the most impactful thing that we can do, and set some goals against it, we really try to dive into this 30–90 day period as fast as we possibly can. And so this is where the day-to-day and week-to-week process comes in.

So, first I’m going to give you a high-level view of the things that we walk through, then we’re going to dive into each step, and then we’re going to take a step back out and talk about how we look at this thing from more of a macro level.

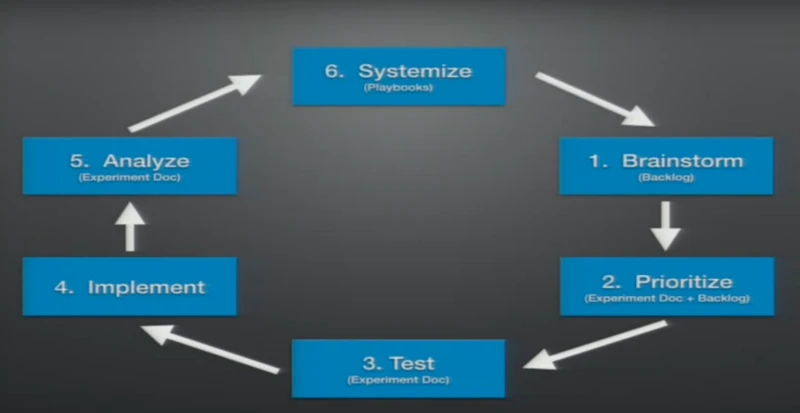

So, the constant steps in the cycle that we go through after we set these

OKRs:

– Brainstorm

– Prioritize

– Design tests around the things that we prioritize

– Implement those tests

– Analyze those tests

– Take the successful ones and systemize them

– Take that analysis and those learnings

– Feed them back into the process

So, let’s dive into each one of these individually. And so, along this

process, there’re really four key documents that we keep that really help us

drive this process.

Backlog

This is just a place for us to dump all of the ideas that we have on the growth team. It’s fully public, and anybody on the team can contribute ideas to the backlog. The main purpose of the backlog is to provide an outlet for our ideas. As we go along in this experimentation cycle, we’re going to find a bunch of new ideas, and instead of trying to remember those ideas and keep them in our heads, we provide an outlet to empty our headspace and make sure that we’ve recorded the ideas so that we can come back to them later.

Pipeline

This is basically a ledger of experiments. It’s all of the experiments that we’ve run previously, as well as the ones that are currently running and the ones that are on deck, and of course the highlights of their results. When somebody starts on our team, they can literally go to this pipeline and see, from day one, every single experiment that we ran to get to where we are today.

Experimentation docs

Every experiment gets one of these, and this is probably the most important document out of the four. This experiment doc forces us to think through the important elements, such as why we’re doing this experiment versus others, what we expect from this experiment, and how we’re going to design this experiment and implement it successfully. Then, of course, we record those learnings that are so important to the entire process. We’ll take a look at one specifically.

Playbooks

Basically, anything that we find that’s successful, we first try to productize with software and engineering, but if it’s something that’s done more manually, like a way that works really well to promote a piece of content, we’ll systemize it into Playbooks.

And these are just step-by-step guides for things that we want to repeat over, and over, and over. For all of these documents, we personally use Google Docs so that we can share them with the entire team, we can comment, we can collaborate. They’re fully public to everybody, and anybody can go in and read them at any time.

The actual tools that you use to do this aren’t as important as just getting

into this process.

This first step is brainstorming. The first thing that I want to get across about coming up with growth ideas is that you want to brainstorm on the inputs, not the outputs. If our OKR is set at improving some sort of activation, we’re not going to sit there and say “How can we improve activation rate?”, because the reality is that whatever you’re trying to improve, there’s probably a thousand different ways to improve it.

So, when you sit there and try to think about this thing that can be improved in a thousand different ways, it’s really, really hard to come up with a bunch of growth ideas. So, the first thing that we do is break this thing down into pieces, as small as we possibly can. In a really simplistic example, this product has an activation or onboarding flow that has three steps.

We would basically break it down into three steps, and then we would go

through each step systematically, and brainstorm ideas around each one of

those steps. Basically narrowing the scope of our brainstorm to each one of

these steps makes it much much easier to come up with more specific ideas

about how we can basically improve these inputs, which will lead to an

improved output.

So, there are really four ways that we use to generate growth ideas and, to be honest, I blatantly stole this from a book called “The Innovator’s Solution”. I recommend everybody read it, but the four ways are observe, question, associate, and network.

Brainstorm

Observe

So we do look at how others are doing it, both in our competitive space and non-competitive space. We’ll do an exercise. One of the last things we worked on was optimizing our referral flow. We sat down as a group for 20 minutes, everybody on the team picked two or three products that they use on an active basis, and we walked through their referral flows as a group. We talked about what are the things that they do well, what are the things that we notice, what are the things that we could have done better, and that process ends up generating a ton of ideas for us. But whenever we go through these exercises, I just want to reiterate my point: we always take these things as inspiration, not prescription. And so we’re always looking for ways to adapt what others are doing specifically to our product and our audience.

Questioning

So one specific exercise in this category is something called a “question brainstorm”, which is about an hour-long meeting. For the first 15 to 20 minutes, we ask nothing but questions. There’s no discussion, there are no answers. We just write down questions on little Post-it notes, we announce what the question is, and we put it on the board.

We try to ask as many questions as we possibly can within that time period, anything from the whys, the what-ifs, the what-abouts, everything about our audience and the specific OKR that we’re setting. This does two things. First, it reveals the things that we don’t know. Second, typically, any good answer starts with a good question.

And so these questions give us a pipeline for our PMs or our data scientists to start really digging into, and as we learn some of the answers to these questions, a ton of ideas on how we can play off of those answers tend to pop out of that process.

Associate

There’s a technique called ‘smashing’, you basically take what you’re trying

to improve and smash it with something that’s completely unrelated. So, one of

the audiences for one of our new products is basically salespeople, so

something that we did is we looked, and we asked the question:

“What if our activation process was like closing a deal?”

That’s the thing that salespeople, our audience, find the most exciting. What would the language look like? What type of language would we be using if it was like closing a deal? What type of emotions do they feel that we could try to evoke during this process? And how can we bring out all of those elements, which most people would think are completely unrelated, in our activation flow?

Network

I think, and I hope, the reason most of you are here today is just finding a good network of other growth people. I’m on the phone probably almost every day with a lot of people who are going to be here at this conference today, exchanging ideas, talking about the things that we’re trying: what worked, what didn’t work, why we think it didn’t work. I take a lot of those ideas, feed them into this process, and adapt and use them as inspiration.

So, out of all of these exercises, these brainstorms (we don’t do every single one for every set of OKRs, we pick one or two), we end up with this backlog. Once again, it’s just a list of ideas, where they are in the process, and what category they’re associated with. We’ll talk soon about some of the things on the right-hand side, the prediction and the resource estimate.

Prioritize

After we brainstorm, the biggest thing is that we need to prioritize. As humans, we tend to do a few things: before we dive into an idea, we tend to overestimate the probability of success. After we implement things, we tend to inflate the impact of that success. And we also underestimate the amount of work it’s going to take to actually test and implement this thing.

So, it’s really important to find a way to force yourself to be brutally honest on these three elements, and so we look at our top ideas in the backlog, and we always compare them on three components:

– the probability that we think it’s going to be successful

– the impact that it will have if successful

– the resources required to test and implement

The first and the third, we do very quickly. For probability, we just give a ‘low’, ‘medium’, or ‘high’. Something that has a ‘high’ probability of success is typically based off of a previous experiment where we learned something very concrete, and now we’ve got an idea for an iteration of that based on our learnings. Something ‘low’ is probably when we’re venturing into brand new territory, a new channel or something that we know nothing about. For resources, it’s just a rough estimation across marketing, design, and engineering: one hour, a half day, one day, one week.

The impact is probably the most important one out of these three, and is

probably one that most people miss. And so, every single experiment idea,

everybody has to come up with a hypothesis, which pretty much comes down to

this formula: ‘if successful, this variable that we’re trying to target will

increase by this much because of these assumptions…’

This format is really, really key. We’re making a prediction of what we think is going to happen, and revealing the assumptions that we’re making behind that prediction. Most people look at this and say “well, I have no idea how this is going to work”, and that’s the point: to actually think through why we think this is a good idea and why we think it’s going to work.
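The hypothesis format can be captured as a small template that refuses to produce a statement until at least one assumption is written down. This is a minimal sketch, not the team’s actual tooling: the `Hypothesis` class and its field names are hypothetical, and the example values are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    """One experiment hypothesis in the 'if successful...' format (hypothetical)."""
    variable: str                 # the metric we are trying to move
    expected_change: str          # the predicted increase, e.g. "10%"
    assumptions: list = field(default_factory=list)

    def statement(self):
        """Render the hypothesis, insisting that the assumptions are explicit."""
        if not self.assumptions:
            raise ValueError("list at least one assumption behind the prediction")
        because = "; ".join(self.assumptions)
        return (
            f"If successful, {self.variable} will increase by "
            f"{self.expected_change} because {because}."
        )


# Made-up example values.
h = Hypothesis(
    variable="invite page conversion",
    expected_change="10%",
    assumptions=["testimonials lifted a similar page"],
)
print(h.statement())
```

Forcing the assumptions into the statement is the design choice that matters: it gives teammates something concrete to challenge.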

And there tends to be more data to make this prediction than most people

think, and so the three places that we look to justify our assumptions are

quantitative, qualitative, and secondary data.

Quantitative

We typically get this from all of the data collection around our product. We’ll look at a lot of previous experiments and the data from those and what resulted. A really simple example: if we found that testimonials helped us increase the conversion on our invite page, we might look for other places in the product where we could use testimonials to increase conversion. We know the percentage uplift that the testimonial had on that invite page, and we can make some sort of analogy or comparison to estimate what percentage uplift we expect on those other pieces of the product.
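As a rough illustration of that kind of analogy, the arithmetic might look like the sketch below. The function name, the `transfer` discount (an assumed share of the earlier lift that carries over to a different page), and all the numbers are hypothetical.

```python
def predicted_conversion(baseline_rate, prior_relative_uplift, transfer=0.5):
    """Estimate the conversion rate on a new page from an earlier experiment.

    baseline_rate: current conversion rate of the new page (0.20 means 20%).
    prior_relative_uplift: relative lift seen earlier (0.10 means +10%).
    transfer: assumed share of that lift expected to carry over (hypothetical knob).
    """
    if not 0.0 <= transfer <= 1.0:
        raise ValueError("transfer must be between 0 and 1")
    return baseline_rate * (1.0 + prior_relative_uplift * transfer)


# Made-up numbers: testimonials gave +10% on the invite page,
# and the signup page currently converts at 20%.
estimate = predicted_conversion(0.20, 0.10)
print(f"Predicted signup conversion: {estimate:.1%}")
```

The discount is a judgment call; setting `transfer=1.0` assumes the earlier result carries over in full.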

Qualitative

Surveys, support emails, user testing, different recordings: if we can start to detect patterns coming out of this qualitative data, that’s enough to help us justify one of our assumptions.

Secondary

And, of course, there’s secondary: networking, blogs, competitor observation, anything that I get from my growth network.

If they tell me they saw this sort of lift, it’s at least a data point to give us some sort of indication, rather than pulling things out of thin air. So, we always try to prioritize, once again, against these three things. A lot of times, if we’re weighing between a few things that have a very high probability of success, with lower impact but very low resources, I would rather crank those things out than work on something that has a really low probability and potentially very high impact but is very resource-intensive.

We’ll do the low-resource things first, before we do the resource-intensive things, because the more experiments you get in, the more successful ones you get in, and the faster your growth curve.
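One way to make that comparison concrete is to rank backlog ideas by expected impact per hour of work. This is a sketch under stated assumptions, not the scoring the team uses: the numeric weights for ‘low’, ‘medium’, and ‘high’, the `Idea` fields, and the example backlog are all hypothetical.

```python
from dataclasses import dataclass

# Assumed numeric weights for the low/medium/high labels (hypothetical).
PROBABILITY_WEIGHT = {"low": 0.2, "medium": 0.5, "high": 0.8}


@dataclass
class Idea:
    name: str
    probability: str   # "low", "medium", or "high"
    impact: float      # predicted relative lift in the target metric (0.05 means 5%)
    hours: float       # rough resource estimate to test and implement

    def score(self):
        """Expected impact per hour of work."""
        return PROBABILITY_WEIGHT[self.probability] * self.impact / self.hours


def prioritize(ideas):
    """Return ideas sorted from highest to lowest score."""
    return sorted(ideas, key=lambda idea: idea.score(), reverse=True)


# Made-up backlog entries.
backlog = [
    Idea("New acquisition channel", "low", impact=0.30, hours=40),
    Idea("Testimonials on signup page", "high", impact=0.05, hours=2),
    Idea("Rewrite onboarding emails", "medium", impact=0.10, hours=8),
]
for idea in prioritize(backlog):
    print(f"{idea.name}: {idea.score():.4f}")
```

With these numbers the cheap, high-probability idea ranks first even though its predicted impact is the smallest, which matches the preference described above.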

It sort of builds on itself. We take these assumptions and this hypothesis, and we put them in the experiment doc. This is where we start to create the experiment doc: first the objective, where we state what we’re trying to do, then our hypothesis in that format, and then we list out the assumptions.

These numbers are made up, so if they don’t add up, I apologize. But, basically, now we can sit down and weigh this against all the other experiments in our pipeline comparatively, and say “well, what do we expect out of this one compared to this one? How do we feel about these assumptions?” It allows other people on the team, and me, to challenge the person’s assumptions, and hopefully we get more and more accurate over time.

So, once we have these things prioritized, what we do is we try to design a

Minimum Viable test. I’m sure a lot of you are familiar with the Minimum

Viable Product, this is the same thing, except it’s the minimum thing we can

do to understand or get data around our hypothesis.

A very simple example of this is when we were optimizing our referral and invite flow. In one of our products, we give away a month free for every person you invite. We wanted to test what would happen if we gave away two months, or three months, free for every person invited.

Or what if we went in the opposite direction and only gave one month free for every two or three people they invite? Instead of implementing this whole system code-wise, and the accounting behind that system, all we did was change the landing page and the language around it, and we looked to see how behavior changed.

That took us maybe two hours, compared to days of engineering resources it

would take to basically fully implement it.

And so as we think about this Minimum Viable test, we’ll outline it in the experiment doc. So, this is an example of a very simple homepage experiment where we’re testing three different themes with a couple of variations per theme.

The outline of the experiment is meant to be such that if anybody else goes back and looks at this experiment, trying to understand the learnings or possibly repeat or iterate off the experiment, they can pretty much repeat that experiment, to a certain degree, without having to talk to you.

It’s not super detailed, and this shouldn’t take you that long, but it’s used for that purpose, as well as forcing you to think about the experiment beforehand and what you need to do to get a valid result.

There were a number of times where we dove into an experiment, ran it, got the results, and then we were like “oh crap, we forgot to control for this one variable…” and then we had to go back through the whole process again.

That used to happen a lot before we implemented this, and so just taking the

five minutes to think about this beforehand really helps with that.

So, we look at three things when we analyse the success or failure of an

experiment.

Digging into the question ‘why?’ will help you, once again, go back and start

understanding things about your customer, your channel, and your product, and

will lead to basically iterations and new ideas of the experiments that you

should probably run next.

If you don’t ask this question ‘why?’ and you just blindly move on to more

experiments, you’re just blindly running experiments. You’re not actually

taking the learnings, and building and working towards something that’s more

successful.

So, when we go through this analysis (these are all the homepage variations), we look at every step of the funnel: the views, the click-through rate, the conversion to registrations, the activation rate, and then the end ratio.
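A per-variation funnel breakdown like this can be sketched in a few lines. The function name, the variation names, and the counts are hypothetical, and treating the “end ratio” as activations divided by views is an assumption about what the slide showed.

```python
def funnel_rates(views, clicks, registrations, activations):
    """Step-by-step conversion rates for one variation."""
    def ratio(numerator, denominator):
        # Guard against empty steps so a dead variation does not crash the report.
        return numerator / denominator if denominator else 0.0

    return {
        "click_through_rate": ratio(clicks, views),
        "registration_rate": ratio(registrations, clicks),
        "activation_rate": ratio(activations, registrations),
        "end_ratio": ratio(activations, views),  # assumed definition
    }


# Made-up counts for two homepage variations.
variations = {
    "theme_a": funnel_rates(views=1000, clicks=200, registrations=50, activations=20),
    "theme_b": funnel_rates(views=1000, clicks=250, registrations=50, activations=10),
}

best_clicks = max(variations, key=lambda name: variations[name]["click_through_rate"])
best_activation = max(variations, key=lambda name: variations[name]["activation_rate"])
print("Best click-through:", best_clicks)
print("Best activation:", best_activation)
```

Looking at every step matters because the variation that wins on click-through is not necessarily the one that activates people best, as in the made-up numbers here.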

And we’ll look at this experiment and take the learnings: what were the winners? In the actual experiment, when we ran this, what we found was that some variations were activating people much better than others. So we used the elements from the variations that were activating people much better: we actually took them off the homepage and threw them into the activation process, which helped the activation process even more.

Anyways, the point is to extract as many learnings as you can out of every experiment, list the next iterations and the action items, and throw those action items back into the backlog. Take these learnings, go back to your backlog, look at the prioritization of your next experiments, and adjust your predictions based off of your most recent learnings.

Then, of course, step six is to systemize. Once you find things that are successful, we first, once again, try to productize them. Do as much as you can with technology and engineering. And the things that you can’t do with technology and engineering, you build into Playbooks.

And so as you hire and you scale the team, you have these Playbooks that you

can just point people to, and they can repeat these things with pretty much

minimal effort. You’ve retained all of that knowledge that you’ve built up and

learned through all these experiments.

So, you do this over, and over, and over again, as many times as you possibly can within this 30–90 day OKR period. You take all of this analysis, all these learnings, and constantly feed it back into the backlog.

Constantly refine your predictions so that you keep building off of this base of knowledge.

And so what a typical week looks like for our team is that on Monday we have a 60–90 minute meeting. It is the only meeting we have all week. We start the meeting off with personal learnings: every single person states one thing they learned about our product or channel last week.

If everybody is not learning at least one thing, then something is seriously wrong. We’ll then review our OKRs and our progress against them. And if we’re not on track, then we will talk about how we get back on track.

And then we go through the experiments; we pick out the key experiments that led to the most analysis. And the people who owned the experiments talk about what they learned, they share the learnings, and based off the shared learnings, we talk about what experiments we’re going to start this week.

The rest of the week is dedicated to the other steps: we predict, prioritise, implement, analyse, systemise, and we constantly try to repeat that cycle as much as possible. And so we get into this cycle of constantly zooming out: once again, setting those OKRs, thinking about what are the most impactful things that we can do. Zoom in, run as many experimentation cycles as we possibly can. Zoom out, zoom in, zoom out.

And so this process helps you: instead of constantly having to stop and think “Am I working on the right things?”, you take that time in the zoom-out phase to think about that, and in the 30–90 day period, you’re just cranking through this experimentation cycle as fast as possible.

And after a few of these cycles, we’ll take a step back; we’ll look at macro-optimization. We’ll look at three things. First, our batting average: how many successes to failures, and are we improving over time?

The second thing is accuracy: are our hypotheses getting more and more accurate, especially in the channels where we’ve got the most history? The more you work on a channel, the more accurate you should probably get.

And the third is throughput: how many experiments are you running in a given period, and how can you do more? You can typically optimise these things in three ways: you can get better with the process, with the team, or with your tools and instrumentation.

We’ll probably do this on a quarterly basis, or once every three/four months,

and that way we are constantly optimising this process.
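The three macro measures can be computed straight from the experiment ledger. The sketch below is one possible reading, with hypothetical names and made-up data: batting average is taken as the share of experiments that succeeded, accuracy as the mean absolute gap between predicted and actual lift, and throughput as experiments per week.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """Result of one finished experiment (hypothetical ledger row)."""
    predicted_lift: float
    actual_lift: float
    success: bool


def batting_average(outcomes):
    """Share of experiments that were successes."""
    return sum(o.success for o in outcomes) / len(outcomes)


def mean_prediction_error(outcomes):
    """Average absolute gap between predicted and actual lift (lower is better)."""
    return sum(abs(o.predicted_lift - o.actual_lift) for o in outcomes) / len(outcomes)


def throughput(outcomes, weeks):
    """Experiments completed per week."""
    return len(outcomes) / weeks


# Made-up results from one eight-week period.
period = [
    Outcome(predicted_lift=0.10, actual_lift=0.08, success=True),
    Outcome(predicted_lift=0.05, actual_lift=0.00, success=False),
    Outcome(predicted_lift=0.20, actual_lift=0.25, success=True),
    Outcome(predicted_lift=0.10, actual_lift=0.10, success=False),
]
print(f"Batting average: {batting_average(period):.0%}")
print(f"Mean prediction error: {mean_prediction_error(period):.3f}")
print(f"Throughput: {throughput(period, weeks=8):.2f} per week")
```

Comparing these numbers from one review to the next shows whether the process is improving.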

And that’s it, thank you.
